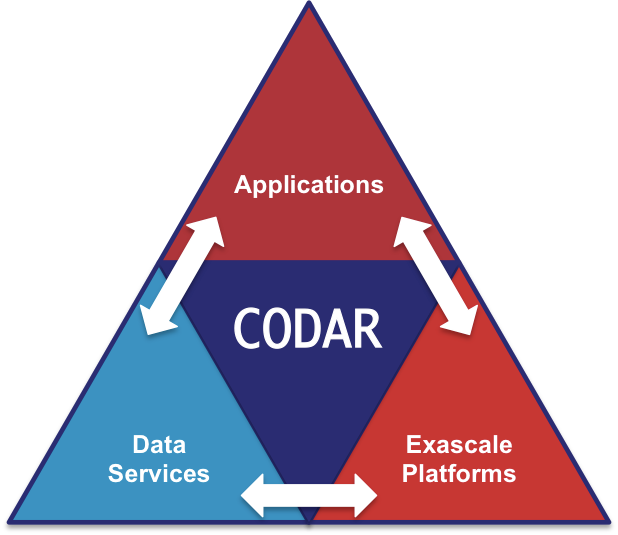

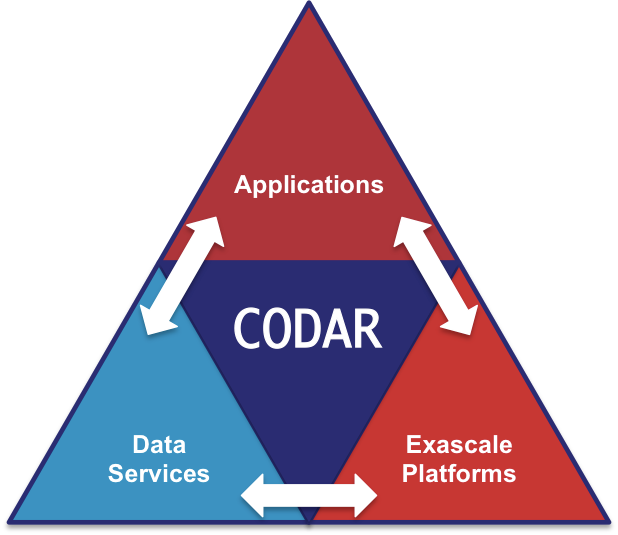

This site has been established as part of the ECP CODAR project.

This site provides reference scientific datasets, data reduction techniques, error metrics, error controls and error assessment tools for users and developers of scientific data reduction techniques.

Important: when publishing results from one or more datasets presented in this webpage, please:

● Cite: SDRBench: https://sdrbench.github.io

● Please also cite: K. Zhao, S. Di, X. Liang, S. Li, D. Tao, J. Bessac, Z. Chen, and F. Cappello, “SDRBench: Scientific Data Reduction Benchmark for Lossy Compressors”, International Workshop on Big Data Reduction (IWBDR2020), in conjunction with IEEE Bigdata20.

● Acknowledge: the source of the dataset you used, the DOE NNSA ECP project, and the ECP CODAR project.

● Check: the conditions for publication (some dataset sources request a prior check).

● Contact: the compressor authors to get the correct compressor configuration for each dataset and each comparison metric.

● Dimension: the dimensions shown in the 'Format' column of the table are listed in row-major order (a.k.a. C order), which is consistent with well-known I/O libraries such as HDF5. For example, for the CESM-ATM dataset (1800 x 3600), 1800 is the higher dimension (changing slower) and 3600 is the lower dimension (changing faster). The executables of most compressors (such as SZ, ZFP and FPZIP) expect the dimensions in the reverse order (for example, -2 3600 1800). If you are not sure about the dimension order, one simple method is to try the different orders and keep the one that gives the highest compression ratio.
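The reversal described above can be sketched in a few lines of Python. This is only an illustration: the helper names `cli_dims` and `element_count` are hypothetical, and the sketch assumes the `-1`/`-2`/`-3` flag style used by the command examples in the table below.

```python
def cli_dims(row_major_shape):
    """Build the dimension flag for a shape given in row-major (C) order.

    The 'Format' column lists the slowest-changing dimension first, while the
    command examples on this page list the fastest-changing dimension first,
    so the shape is simply reversed.
    """
    if not 1 <= len(row_major_shape) <= 3:
        # Only the -1, -2 and -3 forms appear in the examples on this page.
        raise ValueError("expected a 1D, 2D or 3D shape")
    fastest_first = list(reversed(row_major_shape))
    return "-{} {}".format(len(fastest_first), " ".join(str(n) for n in fastest_first))


def element_count(shape):
    """Number of values in one field with the given shape."""
    count = 1
    for n in shape:
        count *= n
    return count


print(cli_dims((1800, 3600)))       # -2 3600 1800
print(cli_dims((100, 500, 500)))    # -3 500 500 100
print(element_count((1800, 3600)))  # 6480000
```

The element count is a quick sanity check: multiplied by the bytes per value (4 for single precision), it should match the size of the raw file.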

Data sets:

Name | Type | Format | Size (data) | Command Examples | Link |

||||||||||||||||||||||||||||||||||||

CESM-ATM Source: Mark Taylor (SNL) |

Climate simulation

|

Dataset1 : 79 fields: 2D, 1800 x 3600 ; Dataset2 : 1 field : 3D, 26x1800x3600. Both are single precision, binary

|

Dataset1 (raw): 1.47GB Dataset1 (cleared): 1.47GB Dataset2: 17GB

(in the cleared data, all background data are set to zero) |

SZ(Compress): sz -z -f -i CLDHGH_1_1800_3600.f32 -M REL -R 1E-2 -2 3600 1800

SZ(Decompress): sz -x -f -i CLDHGH_1_1800_3600.f32 -2 3600 1800 -s CLDHGH_1_1800_3600.f32.sz -a

ZFP: zfp -f -i CLDHGH_1_1800_3600.f32 -z CLDHGH_1_1800_3600.f32.zfp -o CLDHGH_1_1800_3600.f32.zfp.out -2 3600 1800 -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i CLDHGH_1_1800_3600.f32 -d 3600 -d 1800 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

Z-checker-installer: ./runZCCase.sh -f REL CESM-ATM raw-data-dir f32 3600 1800 |

|

||||||||||||||||||||||||||||||||||||

EXAALT Source: EXAALT team This dataset has been approved for unlimited release by Los Alamos National Laboratory and has been assigned LA-UR-18-25670. |

Molecular dynamics simulation |

6 fields: x,y,z,vx,vy,vz, Each field stored separately, Single precision, Binary, Little-endian

|

Dataset1: 60 MB Dataset2: 973 MB Dataset3: 2.4 GB |

SZ(Compress): sz -z -f -i xx.f32 -M REL -R 1E-2 -1 2869440

SZ(Decompress): sz -x -f -i xx.f32 -1 2869440 -s xx.f32.sz -a

ZFP: zfp -f -i xx.f32 -z xx.f32.zfp -o xx.f32.zfp.out -1 2869440 -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i xx.f32 -d 2869440 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|||||||||||||||||||||||||||||||||||||

Hurricane ISABEL Source: |

Weather simulation

|

13 fields: 3D, 100x500x500, single-precision, binary (dataset cleared by replacing the background with 0)

|

1.25GB

|

SZ(Compress): sz -z -f -i Pf48.bin.f32 -M REL -R 1E-2 -3 500 500 100

SZ(Decompress): sz -x -f -i Pf48.bin.f32 -3 500 500 100 -s Pf48.bin.f32.sz -a

ZFP: zfp -f -i Pf48.bin.f32 -3 500 500 100 -z Pf48.bin.f32.zfp -o Pf48.bin.f32.zfp.out -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i Pf48.bin.f32 -d 500 -d 500 -d 100 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

Z-checker-installer: ./runZCCase.sh -f REL Hurricane raw-data-dir f32 500 500 100 |

|

||||||||||||||||||||||||||||||||||||

EXAFEL Source: LCLS |

Images from the LCLS instrument |

1 field: 2D, Single precision HDF5 and binary

|

51 MB, 8.5 GB, 1GB |

SZ(Compress): sz -z -f -i EXAFEL-LCLS-986x32x185x388.f32 -M REL -R 1E-2 -2 388 5837120

SZ(Decompress): sz -x -f -i EXAFEL-LCLS-986x32x185x388.f32 -s EXAFEL-LCLS-986x32x185x388.f32.sz -2 388 5837120 -a

ZFP: zfp -f -a 1E-2 -i EXAFEL-LCLS-986x32x185x388.f32 -z EXAFEL-LCLS-986x32x185x388.f32.zfp -o EXAFEL-LCLS-986x32x185x388.f32.zfp.out -2 388 5837120 -s

LibPressio: pressio -b compressor=$COMP -i EXAFEL-LCLS-986x32x185x388.f32 -d 5837120 -d 388 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|

||||||||||||||||||||||||||||||||||||

HACC Source: HACC team (ECP EXASKY) |

Cosmology: particle simulation

|

1 snapshot: 6 fields (x,y,z,vx,vy,vz), Each field stored separately, Single precision, Binary, Little-endian

|

19 GB

5 GB |

SZ(Compress): sz -z -f -i xx.f32 -M ABS -A 0.003 -1 1073726487

SZ(Decompress): sz -x -f -i xx.f32 -s xx.f32.sz -1 1073726487 -a

ZFP: zfp -f -i xx.f32 -z xx.f32.zfp -o xx.f32.zfp.out -a 0.003 -1 1073726487

LibPressio: pressio -b compressor=$COMP -i xx.f32 -d 1073726487 -t float -o abs=3e-3 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|||||||||||||||||||||||||||||||||||||

NYX Source: Lukic et al. “methods: numerical, intergalactic medium, quasars: absorption lines, large-scale structure of universe”, journal of Monthly Notices of Royal Astronomical Society |

Cosmology: Adaptive mesh hydrodynamics + N-body cosmological simulation |

6 fields, 3D, 512 x 512 x 512 Single precision, Binary, Little-endian

|

2.7 GB |

SZ(Compress): sz -z -f -i temperature.f32 -M REL -R 1E-2 -3 512 512 512

SZ(Decompress): sz -x -f -i temperature.f32 -s temperature.f32.sz -3 512 512 512 -a

ZFP: zfp -f -i temperature.f32 -z temperature.f32.zfp -o temperature.f32.zfp.out -3 512 512 512 -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i temperature.f32 -d 512 -d 512 -d 512 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|||||||||||||||||||||||||||||||||||||

NWChem Source: Example molecular 2-electron integral values generated by libint, a library developed by the Valeev research group at Virginia Tech. See https://github.com/evaleev/libint Libint is an integral evaluation option in NWChemEx. |

Two-electron repulsion integrals computed over Gaussian-type orbital basis sets |

3 fields, 1D, Double precision, Binary, Little-endian |

16GB |

SZ(Compress): sz -z -d -i 631-tst.bin.d64 -M REL -R 1E-2 -1 102953248

SZ(Decompress): sz -x -d -i 631-tst.bin.d64 -s 631-tst.bin.d64.sz -1 102953248 -a

ZFP: zfp -d -i 631-tst.bin.d64 -z 631-tst.bin.d64.zfp -o 631-tst.bin.d64.zfp.out -a 1E-2 -1 102953248 -s

LibPressio: pressio -b compressor=$COMP -i 631-tst.bin.d64 -d 102953248 -t double -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|

||||||||||||||||||||||||||||||||||||

SCALE-LETKF Source: simulation data are generated by The Local Ensemble Transform Kalman Filter (LETKF) data assimilation package for the SCALE-RM weather model (provided by RIKEN) Contact: Guo-yuan Lien (guoyuan.lien@gmail.com) |

Climate simulation |

13 fields, 3D, Single precision, binary, little-endian

|

4.9GB |

SZ(Compress): sz -f -z -i T-98x1200x1200.f32 -M REL -R 1E-2 -3 1200 1200 98

SZ(Decompress): sz -f -x -i T-98x1200x1200.f32 -s T-98x1200x1200.f32.sz -3 1200 1200 98 -a

ZFP: zfp -f -i T-98x1200x1200.f32 -z T-98x1200x1200.f32.zfp -o T-98x1200x1200.f32.zfp.out -a 1E-2 -3 1200 1200 98 -s

LibPressio: pressio -b compressor=$COMP -i T-98x1200x1200.f32 -d 1200 -d 1200 -d 98 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|||||||||||||||||||||||||||||||||||||

QMCPACK Source: QMCPACK performance test (contact: Ye Luo: yeluo@anl.gov) |

Many-body ab initio Quantum Monte Carlo (electronic structure of atoms, molecules, and solids) |

1 field, 288 orbitals, 3D, 69 x 69 x 115, Single precision and Binary, Little endian

|

1 GB |

SZ(Compress): sz -z -f -i einspline_288_115_69_69.pre.f32 -M REL -R 1E-2 -3 69 69 33120

SZ(Decompress): sz -x -f -i einspline_288_115_69_69.pre.f32 -s einspline_288_115_69_69.pre.f32.sz -3 69 69 33120 -a

ZFP: zfp -f -i einspline_288_115_69_69.pre.f32 -z einspline_288_115_69_69.pre.f32.zfp -o einspline_288_115_69_69.pre.f32.zfp.out -a 1E-2 -3 69 69 33120

LibPressio: pressio -b compressor=$COMP -i einspline_288_115_69_69.pre.f32 -d 69 -d 69 -d 33120 -t float -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

Z-checker-installer: ./runZCCase.sh -f REL QMCPack raw-data-dir f32 69 69 33120 |

|||||||||||||||||||||||||||||||||||||

Miranda Source: hydrodynamics code for large turbulence simulations (contact: Peter Lindstrom lindstrom2@llnl.gov) |

hydrodynamics code for large turbulence simulations |

3D, 7 fields in total, each field is 256x384x384, Double precision and Binary, Little endian

|

Small 1.87GB Big 106GB |

SZ(Compress): sz -z -d -i density.d64 -M REL -R 1E-2 -3 384 384 256

SZ(Decompress): sz -x -d -i density.d64 -s density.d64.sz -3 384 384 256 -a

ZFP: zfp -d -3 384 384 256 -i density.d64 -z density.d64.zfp -o density.d64.zfp.out -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i density.d64 -d 384 -d 384 -d 256 -t double -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

Small (256x384x384)

Big (3072x3072x3072)

|

||||||||||||||||||||||||||||||||||||

S3D Source: Kolla, Hemanth (hnkolla@sandia.gov) |

Combustion simulation

|

11 fields, 3D, 500x500x500, Double precision Binary, Little-endian

|

44 GB |

SZ(Compress): sz -d -z -i stat_planar.1.1000E-03.field.d64 -M REL -R 1E-2 -3 500 500 500

SZ(Decompress): sz -d -x -i stat_planar.1.1000E-03.field.d64 -s stat_planar.1.1000E-03.field.d64.sz -3 500 500 500 -a

ZFP: zfp -d -3 500 500 500 -i stat_planar.1.1000E-03.field.d64 -z stat_planar.1.1000E-03.field.d64.zfp -o stat_planar.1.1000E-03.field.d64.zfp.out -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i stat_planar.1.1000E-03.field.d64 -d 500 -d 500 -d 500 -t double -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

|

|||||||||||||||||||||||||||||||||||||

XGC Source: Princeton Plasma Physics Laboratory (PPPL) https://jychoi-hpc.github.io/adios-python-docs/XGC-mesh-and-field-data.html |

Fusion Simulation |

9 timesteps, 3D, unstructured mesh (the mesh data is in the archive), 20694x512, Double precision Binary, Little-endian |

1.2 GB |

SZ(Compress): NA SZ(Decompress): NA ZFP: NA LibPressio: N/A Z-checker-installer: NA

(the files are stored in .bp format, you need to install ADIOS to read them)

|

Dataset (stored in Adios2) Dataset (stored in binary format) |

||||||||||||||||||||||||||||||||||||

NSTX GPI Source: Michael Churchill, Princeton Plasma Physics Laboratory (PPPL) - Copyright: free to use, but check with Michael Churchill (rchurchi@pppl.gov) before publishing results. |

Fusion Gas Puff Image (GPI) data |

369,357 steps, 2D time-series data (movie), 80x64 image with Double precision Binary, Little-endian |

4.1 GB |

SZ(Compress): NA SZ(Decompress): NA ZFP: NA LibPressio: N/A Z-checker-installer: NA

(the files are stored in .bp format, you need to install ADIOS to read them) |

|||||||||||||||||||||||||||||||||||||

Brown Samples Source: Brown University |

Synthetic, generated to specified regularity |

1D, Double precision Binary, Little-endian (3 datasets with 3 different regularity)

|

256 MB 256 MB 256 MB |

SZ(Compress): sz -z -d -i sample_r_B_0.5_26.d64 -M REL -R 1E-2 -1 33554433

SZ(Decompress): sz -x -d -i sample_r_B_0.5_26.d64 -s sample_r_B_0.5_26.d64.sz -1 33554433 -a

ZFP: zfp -d -1 33554433 -i sample_r_B_0.5_26.d64 -z sample_r_B_0.5_26.d64.zfp -o sample_r_B_0.5_26.d64.zfp.out -a 1E-2 -s

LibPressio: pressio -b compressor=$COMP -i sample_r_B_0.5_26.d64 -d 33554433 -t double -o rel=1e-2 -m time -m size -M all

where $COMP can be sz, zfp, sz3, mgard, etc…

Z-checker-installer: ./runZCCase.sh -d REL Brown-samples raw-data-dir d64 33554433 |

Note: This table will be augmented with metrics that matter for users of these datasets as well as recommended settings for error control (lossy compression).

In general, data file extensions follow this convention:

● .f32 means single-precision floating-point data in little-endian byte order

● .F32 means single-precision floating-point data in big-endian byte order

● .f64 means double-precision floating-point data in little-endian byte order

● .F64 means double-precision floating-point data in big-endian byte order
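As a rough illustration of this naming convention, the sketch below reads a headerless binary file with the Python standard library, choosing the precision and byte order from the extension. The names `EXTENSIONS` and `read_values` are hypothetical, only the four extensions listed above are handled (any other extension raises a `KeyError`), and the whole file is loaded into memory, so it is only suitable for small files or for reading the first few values with `max_values`.

```python
import os
import struct
import tempfile

# Extension -> (struct byte-order prefix, struct type code, bytes per value).
EXTENSIONS = {
    ".f32": ("<", "f", 4),  # single precision, little-endian
    ".F32": (">", "f", 4),  # single precision, big-endian
    ".f64": ("<", "d", 8),  # double precision, little-endian
    ".F64": (">", "d", 8),  # double precision, big-endian
}


def read_values(path, max_values=None):
    """Read a headerless binary file into a list of Python floats."""
    order, code, width = EXTENSIONS[os.path.splitext(path)[1]]
    size = os.path.getsize(path)
    if size % width != 0:
        raise ValueError("file size is not a multiple of the value width")
    count = size // width
    if max_values is not None:
        count = min(count, max_values)
    with open(path, "rb") as fh:
        raw = fh.read(count * width)
    return list(struct.unpack(f"{order}{count}{code}", raw))


# Small self-check: write three little-endian floats and read them back.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.f32")
    with open(demo_path, "wb") as fh:
        fh.write(struct.pack("<3f", 1.0, 2.5, -3.0))
    values = read_values(demo_path)

print(values)  # [1.0, 2.5, -3.0]
```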

Others: please submit your proposal of datasets to codar-reduction (at) cels.anl.gov. Requirements: datasets will be open to public access. Dataset should be linked to a simulation application or a scientific instrument. Metadata should explain the source origin of the dataset and how it has been produced (what simulation, what instrument, what settings). Upon review by the SDRBenchmarks committee, the dataset will (or will not) be added to the SDRBenchmarks repository.

Lossy compressors:

● SZ: https://github.com/szcompressor/SZ

● ZFP: https://github.com/LLNL/zfp

● TTHRESH: https://github.com/rballester/tthresh

● MGARD: https://github.com/CODARcode/MGARD

● SPERR: https://github.com/NCAR/SPERR

● ISABELA: http://freescience.org/cs/ISABELA/ISABELA.html

● PFPL: https://github.com/burtscher/PFPL

● DCTZ: https://github.com/swson/DCTZ

● Digit rounding: https://github.com/CNES/Digit_Rounding

(standalone version: https://github.com/disheng222/digitroundingZ )

● Bit grooming: https://github.com/nco/nco

(standalone version: https://github.com/disheng222/BitGroomingZ.git )

● Others: please submit your suggestion of lossy compressor, with github link to codar-reduction (at) cels.anl.gov

Unifying generic Interface for Compression:

● LibPressio: https://robertu94.github.io/libpressio/

Lossless compressors:

● FPZIP: https://computation.llnl.gov/projects/floating-point-compression

● ZFP: https://github.com/LLNL/zfp

● FPC: http://cs.txstate.edu/~burtscher/research/FPC/

● pFPC (parallel): http://cs.txstate.edu/~burtscher/research/pFPC/

● SPDP: http://cs.txstate.edu/~burtscher/research/SPDP/

● GFC (GPUs): http://cs.txstate.edu/~burtscher/research/GFC/

● MPC (GPUs): http://cs.txstate.edu/~burtscher/research/MPC

● BLOSC: https://github.com/Blosc/c-blosc

● Fpcompress: https://github.com/burtscher/FPcompress/

● Others: please submit your suggestion of lossless compressor, with github link to codar-reduction (at) cels.anl.gov

Unifying generic Interface for Compression (lossy and lossless):

● LibPressio: https://robertu94.github.io/libpressio/

Commonly used metrics for reduction technique assessment:

● Reduction/reconstruction rate in [G|M|K]B/s

● Reduction ratio (Initial size/compressed size)

● [Lossy] Rate distortion (PSNR at different bit rates)

● [Lossy] PSNR in dB, MSE, RMSE and NRMSE

● [Lossy] SSIM (structural similarity index)

● [Lossy] Pearson correlation of the initial and reconstructed dataset

● [Lossy] Autocorrelation of the compression error (1D, … nD)

● [Lossy] Spectral alteration (difference of power spectrum)

● [Lossy] Preservation of the n-th order derivatives

● Others: please submit your suggestion of assessment metric, to codar-reduction (at) cels.anl.gov
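Several of the metrics listed above can be computed in a few lines. The sketch below is a minimal pure-Python illustration, not the implementation used by any of the assessment tools. It assumes one common convention that this page does not spell out: NRMSE and PSNR are normalized by the value range (max minus min) of the original data. All function names are hypothetical.

```python
import math


def compression_ratio(initial_bytes, compressed_bytes):
    """Reduction ratio: initial size divided by compressed size."""
    return initial_bytes / compressed_bytes


def bit_rate(compressed_bytes, value_count):
    """Average number of compressed bits per data value."""
    return 8 * compressed_bytes / value_count


def error_metrics(original, reconstructed):
    """Return MSE, RMSE, NRMSE and PSNR (dB) for two equal-length sequences."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    value_range = max(original) - min(original)
    if value_range == 0:
        raise ValueError("NRMSE and PSNR are undefined for constant data")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    rmse = math.sqrt(mse)
    # Assumption: PSNR is defined relative to the value range of the original.
    psnr = math.inf if rmse == 0 else 20 * math.log10(value_range / rmse)
    return {"mse": mse, "rmse": rmse, "nrmse": rmse / value_range, "psnr": psnr}


def pearson(x, y):
    """Pearson correlation coefficient (undefined if either input is constant)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


original = [0.0, 1.0, 2.0, 3.0, 4.0]
reconstructed = [0.1, 0.9, 2.1, 2.9, 4.1]
metrics = error_metrics(original, reconstructed)
print(metrics)
print(pearson(original, reconstructed))
```

A rate-distortion curve is then obtained by repeating the measurement at several error bounds and plotting PSNR against bit rate.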

Error controls:

● [Common] Pointwise absolute error bound

● [Common] Pointwise relative error bound

● [Variation] Value-range-based pointwise relative error bound

● [Other] Fixed PSNR
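The first three error controls can be checked after decompression with simple comparisons. The sketch below assumes the commonly used definitions, which this page does not state explicitly: an absolute bound limits |x - x'| for every point, a pointwise relative bound limits |x - x'| by a fraction of |x|, and a value-range relative bound limits |x - x'| by a fraction of (max - min) of the original data. The function names are hypothetical.

```python
def max_abs_error(original, reconstructed):
    """Largest pointwise absolute difference between the two sequences."""
    return max(abs(a - b) for a, b in zip(original, reconstructed))


def satisfies_absolute(original, reconstructed, bound):
    """Every point differs from its original by at most `bound`."""
    return max_abs_error(original, reconstructed) <= bound


def satisfies_pointwise_relative(original, reconstructed, ratio):
    """Every point differs by at most `ratio` times its own original magnitude."""
    return all(abs(a - b) <= ratio * abs(a) for a, b in zip(original, reconstructed))


def satisfies_value_range_relative(original, reconstructed, ratio):
    """Every point differs by at most `ratio` times the original value range."""
    value_range = max(original) - min(original)
    return satisfies_absolute(original, reconstructed, ratio * value_range)


original = [1.0, 10.0, 100.0]
reconstructed = [1.05, 10.5, 100.5]

print(satisfies_absolute(original, reconstructed, 0.6))               # True
print(satisfies_pointwise_relative(original, reconstructed, 0.01))    # False
print(satisfies_value_range_relative(original, reconstructed, 0.01))  # True
```

The example shows why the choice of error control matters: the same reconstruction passes a value-range relative bound of 1% but fails a pointwise relative bound of 1%, because the smallest value is perturbed by about 5% of its own magnitude.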

Assessment tools, metrics and error control:

● Z-checker: https://github.com/CODARcode/Z-checker

● Foresight: https://github.com/lanl/VizAly-Foresight

● ldcpy: https://github.com/NCAR/ldcpy

● SFS: https://github.com/JulianKunkel/statistical-file-scanner

● Others: please submit your suggestion of assessment tools, with github link to codar-reduction (at) cels.anl.gov

Contributors/maintainers:

● F. Cappello (ANL, lead),

● M. Ainsworth (Brown University),

● J. Bessac (ANL),

● Martin Burtscher (Texas State University),

● Jong Youl Choi (ORNL)

● E. Constantinescu (ANL),

● S. Di (ANL),

● H. Guo (ANL),

● Peter Lindstrom (LLNL),

● Ozan Tugluk (Brown University).

We sincerely acknowledge that the following contributors agreed on the release of their scientific datasets:

Salman Habib (ANL), Katrin Heitmann (ANL), Zarija Lukic (LBNL), Rob Jacob (ANL), Danny Perez (LANL), Chun Hong Yoon (SLAC), Kristopher W. Keipert (ANL), Guo-Yuan Lien (Central Weather Bureau Taiwan), Ye Luo (ANL), Peter Lindstrom (LLNL), Hemanth Kolla (SNL), Jong Choi (ORNL), Michael Churchill (PPPL), Ozan Tugluk (Brown University)

Sponsors: